Exploring the Potential of XR Technology for Virtual Decoration


In recent years, XR technology has changed the way we can decorate our physical environments. Using AI and computer vision, XR can scan and track real-world surroundings, recognizing and remembering images and objects so that virtual content can be added to them. It can store and recall virtual decorations, making them visible every time someone enters the environment. Image matching is not a new concept, and XR platforms like Vuforia have already demonstrated it in various mobile XR examples. We experimented with smart glasses from Lenovo, which let users customize their surroundings and view the world in a unique way. In this article, we explore the potential of XR technology for virtual decoration, not only in personal settings but also in conferences, events, shopping, and trade fairs.

🎍Decorate your world

XR enthusiasts Sourav Das and Sai Charan Abbireddy have open-sourced DecoAR, an app built while experimenting with decoration using XR image tracking. You just need to take a few pictures of the environment you want to decorate and apply the virtual decoration in the form of a digital picture, video, 3D model, or more. Enjoy it in XR!

Here are the steps a developer can follow to decorate the world. We welcome the developer community to extend it further and build concepts that let end users change the content dynamically. Currently it supports the ThinkReality A3 and needs the Spaces SDK for Unity to run.

Project Setup

Follow the steps below to set up the project.

- Check out the project from the git repo

git clone git@github.com:xrpractice/DecoAR.git

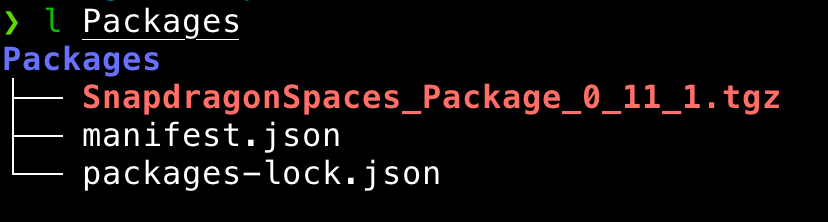

- Download “Spaces SDK for Unity” from https://spaces.qualcomm.com/download-sdk/ and unzip it. From the unzipped files, copy SnapdragonSpaces_Package_0_11_1.tgz from the “Unity package” folder to the “Packages” folder in the project.

- Open the project in Unity (2021.3.9f1 or later, with Android build support)

- Go to Assets > Scenes and open the DecoAR scene
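The SDK copy step above can also be scripted. The following is a minimal Python sketch, assuming the unzipped SDK contains a “Unity package” folder holding the .tgz file as described. The function name `copy_spaces_package` and the paths in the usage note are hypothetical and are not part of the DecoAR project or the Spaces SDK.

```python
import shutil
from pathlib import Path


def copy_spaces_package(sdk_dir, project_dir,
                        package_name="SnapdragonSpaces_Package_0_11_1.tgz"):
    """Copy the Spaces SDK Unity package into the project's Packages folder.

    Assumes the layout described in the steps above: the unzipped SDK has a
    "Unity package" folder that contains the .tgz file.
    """
    source = Path(sdk_dir) / "Unity package" / package_name
    if not source.is_file():
        raise FileNotFoundError(f"SDK package not found: {source}")

    # Create the Packages folder if the checkout does not have it yet.
    packages_dir = Path(project_dir) / "Packages"
    packages_dir.mkdir(parents=True, exist_ok=True)

    target = packages_dir / package_name
    shutil.copy2(source, target)
    return target
```

For example, `copy_spaces_package("Downloads/SpacesSDK", "DecoAR")` would copy the package into `DecoAR/Packages`, where both folder names are placeholders for your own locations.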

DecoAR Scene

Let’s understand the DecoAR scene. It contains ARSessionOrigin and ARSession components to provide AR capability. They are linked to AR Foundation by attaching ARInputManager to ARSession and ARTrackedImageManager to ARSessionOrigin.

We also have an ImageTrackingController, which helps keep track of the image currently being tracked.

Refer to the Snapdragon Spaces documentation for details.

Map the Area to Decorate

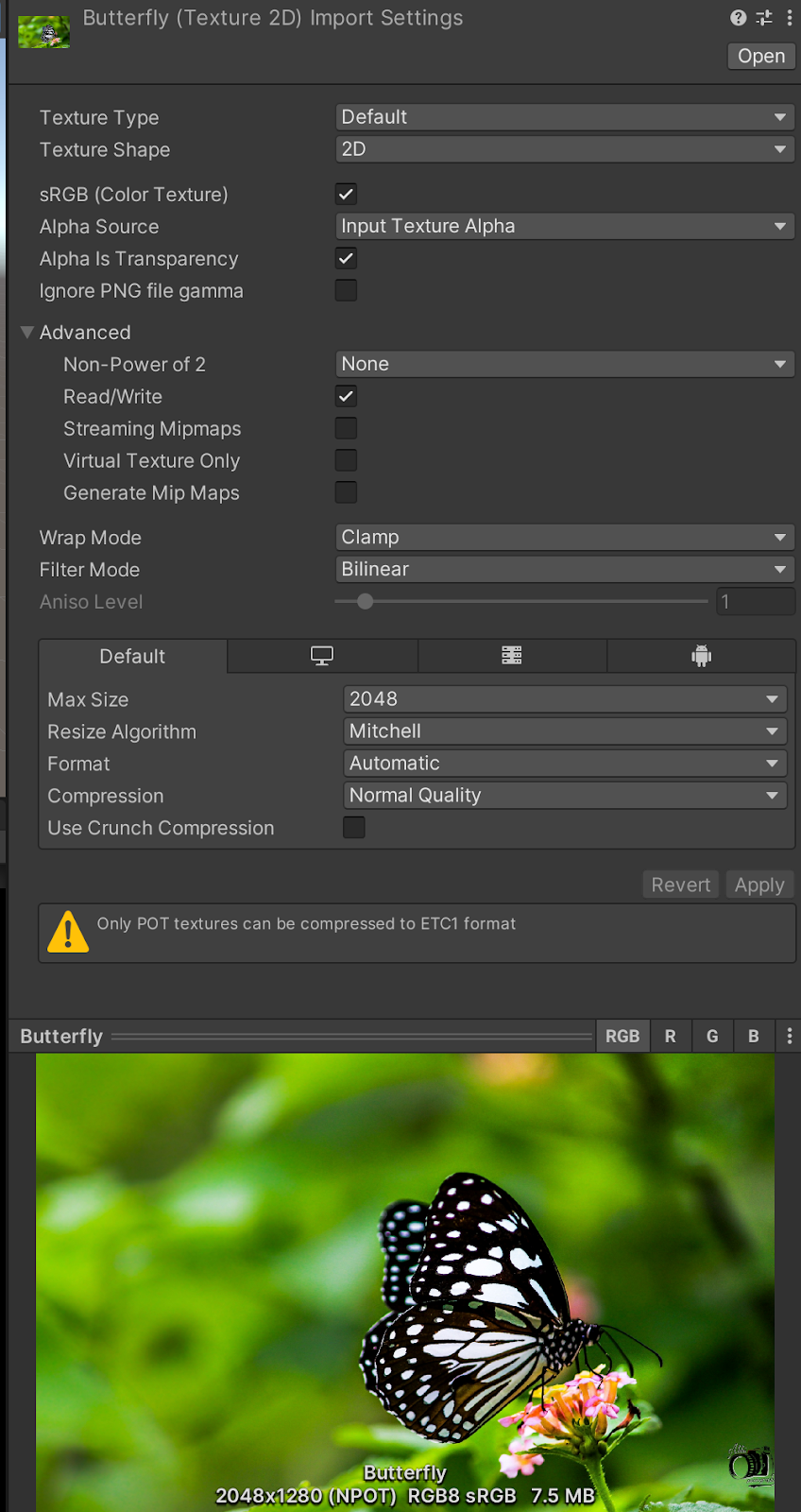

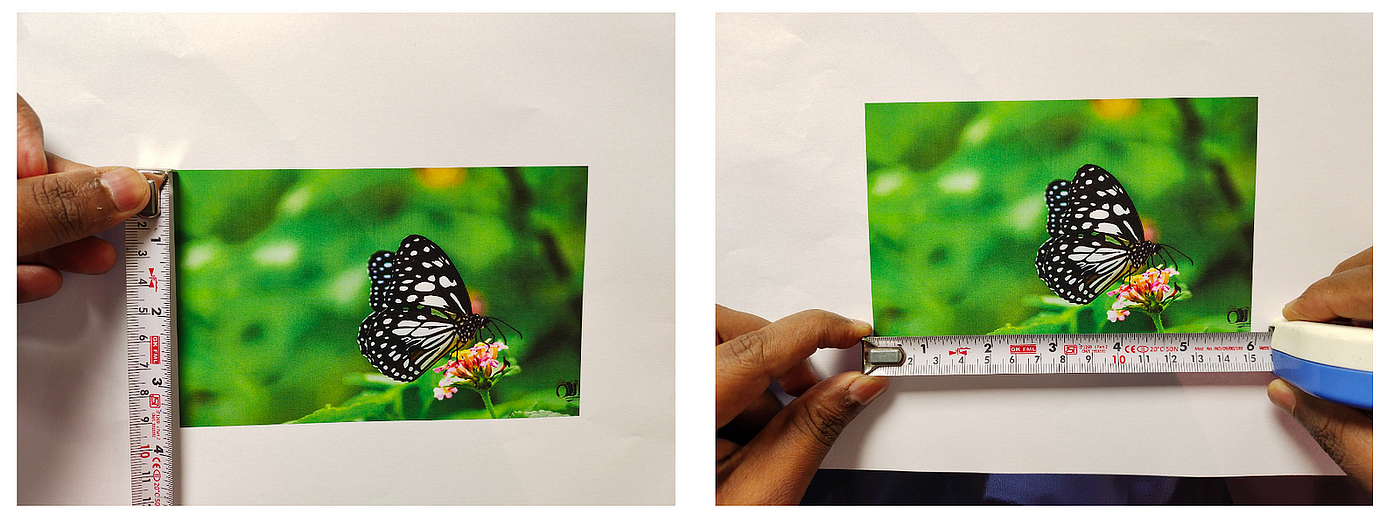

Identify the area and objects you want to decorate. For example, this butterfly picture is placed near a meeting room in my office, and I want to decorate it virtually.

Here are the steps I need to perform to track this picture on the wall.

-

Take out your phone and click a picture and put it in the Assets/Resources folder. Change its properties : Alpha is Transparency : Checked, Wrap Mode : Clamp and Read/Write : Checked

Picture Credit : https://www.itl.cat/pngfile/big/185-1854278_ultra-hd-butterfly-wallpaper-hd.jpg

Picture Credit : https://www.itl.cat/pngfile/big/185-1854278_ultra-hd-butterfly-wallpaper-hd.jpg -

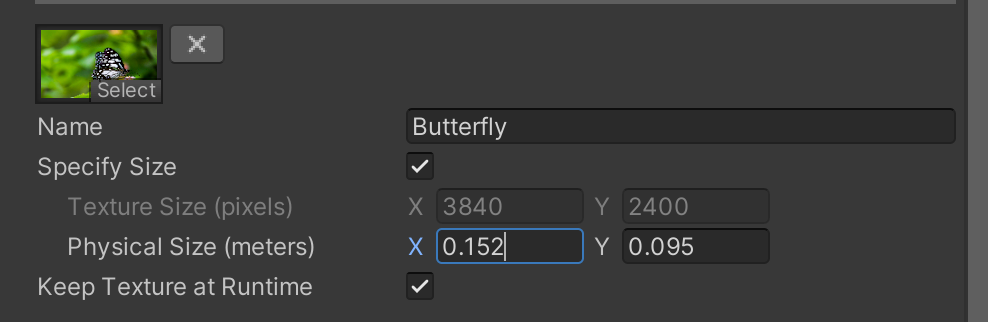

Next we need to add this image for tracking. Go to Assets > Image Libraries > ReferenceImageLibrary and “Add Image” on the bottom. Drag and drop the image from Resources folder into the newly added row.

-

And Measure the Picture length and height

-

Specify the size in meters in the Library, and “Keep texture at Runtime” checked
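Since the library expects the size in meters, it is easy to slip when measuring in centimeters. The sketch below is an illustration only: it converts a measurement to meters and compares the physical aspect ratio with the photo's pixel aspect ratio as a sanity check. It assumes the photo is cropped to the picture's edges. The function names and the example numbers are hypothetical.

```python
def to_meters(width_cm, height_cm):
    """Convert a measured width and height from centimeters to meters."""
    return width_cm / 100.0, height_cm / 100.0


def aspect_ratio_error(size_m, pixel_size):
    """Relative difference between the physical and pixel aspect ratios.

    A large value suggests a measurement slip, or a photo that is not
    cropped to the picture's edges.
    """
    width_m, height_m = size_m
    width_px, height_px = pixel_size
    physical_ratio = width_m / height_m
    pixel_ratio = width_px / height_px
    return abs(physical_ratio - pixel_ratio) / pixel_ratio


# Hypothetical measurement: a 60 cm x 40 cm print photographed at 1920 x 1280.
size_m = to_meters(60, 40)
error = aspect_ratio_error(size_m, (1920, 1280))
print(size_m, f"{error:.3f}")
```

If the error is more than a few percent, it is worth re-measuring or re-cropping before entering the size in the library.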

eXtending the Decoration

This is where we will define what will happen when the images we configured above are tracked.

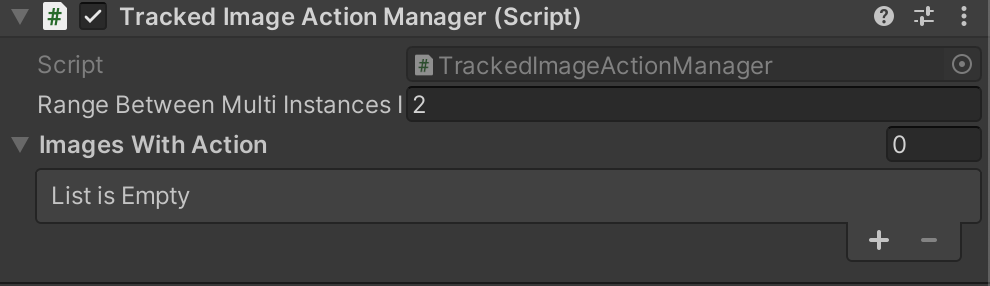

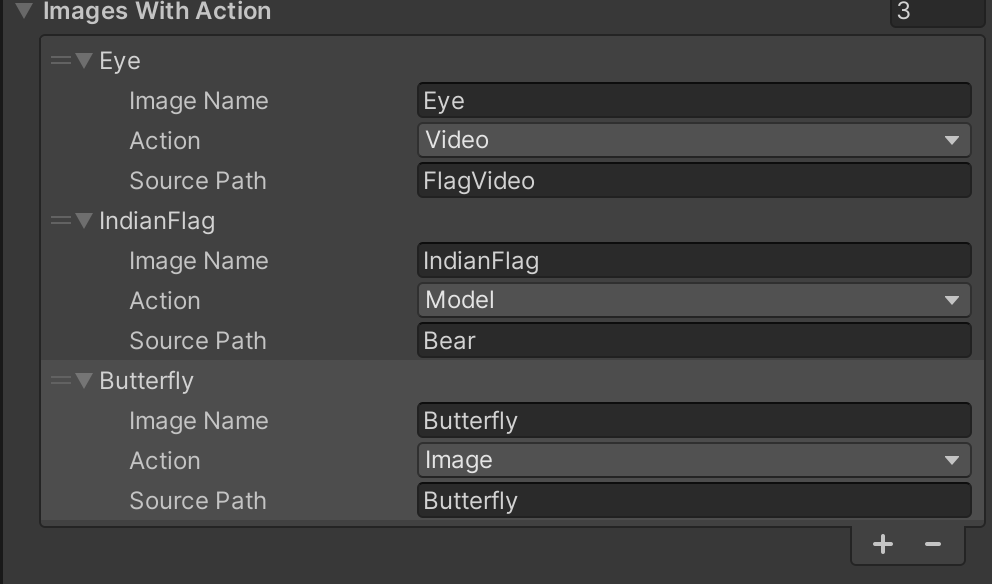

- Locate the prefab called PrefabInstatiater under the Assets/Resources/Prefabs folder. In Tracked Image Action Manager, we have:

- Range Between Multi Instances: specifies the range in meters to instantiate the same action on an already tracked image.

- Images With Action: specifies the actions on tracked images.

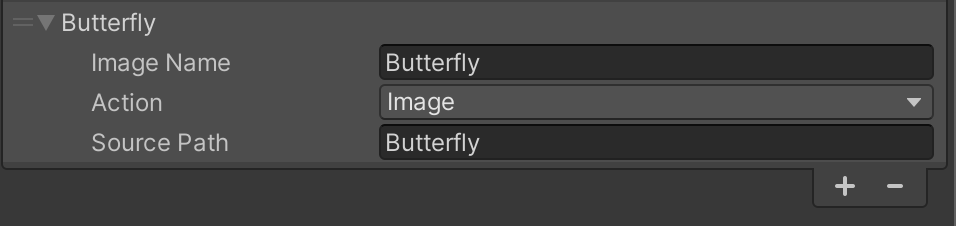

- Expand Images With Action. Each entry has three fields:

- ImageName: the tracked image on which the action should be performed.

- Action: the action to perform. Image, Video, Audio, and 3D Model are supported.

- SourcePath: the source, relative to the “Resources” folder and without any extension. The action and the source type should match; for example, a Video action needs a video source.

For example, when the Butterfly image is tracked, we render the same Butterfly image on top of it.
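To make the three fields concrete, here is a small Python sketch that models one “Images With Action” entry and checks it against the rules described above. It is not the project's code: the real configuration lives in the Unity inspector, and the `ImageAction` class, the `validate` function, and the source path `"Butterfly"` are assumptions made for illustration.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath

# Action names taken from the field list above; the identifiers are illustrative.
SUPPORTED_ACTIONS = {"Image", "Video", "Audio", "3D Model"}


@dataclass
class ImageAction:
    image_name: str   # name of the tracked image that triggers the action
    action: str       # one of SUPPORTED_ACTIONS
    source_path: str  # path relative to "Resources", without an extension


def validate(entry):
    """Return a list of problems found in one entry (empty if none)."""
    problems = []
    if not entry.image_name:
        problems.append("ImageName is empty")
    if entry.action not in SUPPORTED_ACTIONS:
        problems.append(f"unsupported action: {entry.action}")
    path = PurePosixPath(entry.source_path)
    if path.suffix:
        problems.append(f"SourcePath should not include an extension: {path.suffix}")
    if path.is_absolute():
        problems.append("SourcePath should be relative to the Resources folder")
    return problems


# The butterfly example: show the same image on top of the tracked one.
butterfly = ImageAction("Butterfly", "Image", "Butterfly")
print(validate(butterfly))
print(validate(ImageAction("Butterfly", "Image", "Butterfly.jpg")))
```

The first entry passes, while the second is flagged because its source path carries a file extension.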

Similarly, we can track and configure other images as follows.

That’s it, the configuration is done. Now build the app and try it out.

🥽 Experience in XR

Just build the app and install it on the ThinkReality A3. Run the DecoAR app, and it will start tracking the images as soon as it opens.

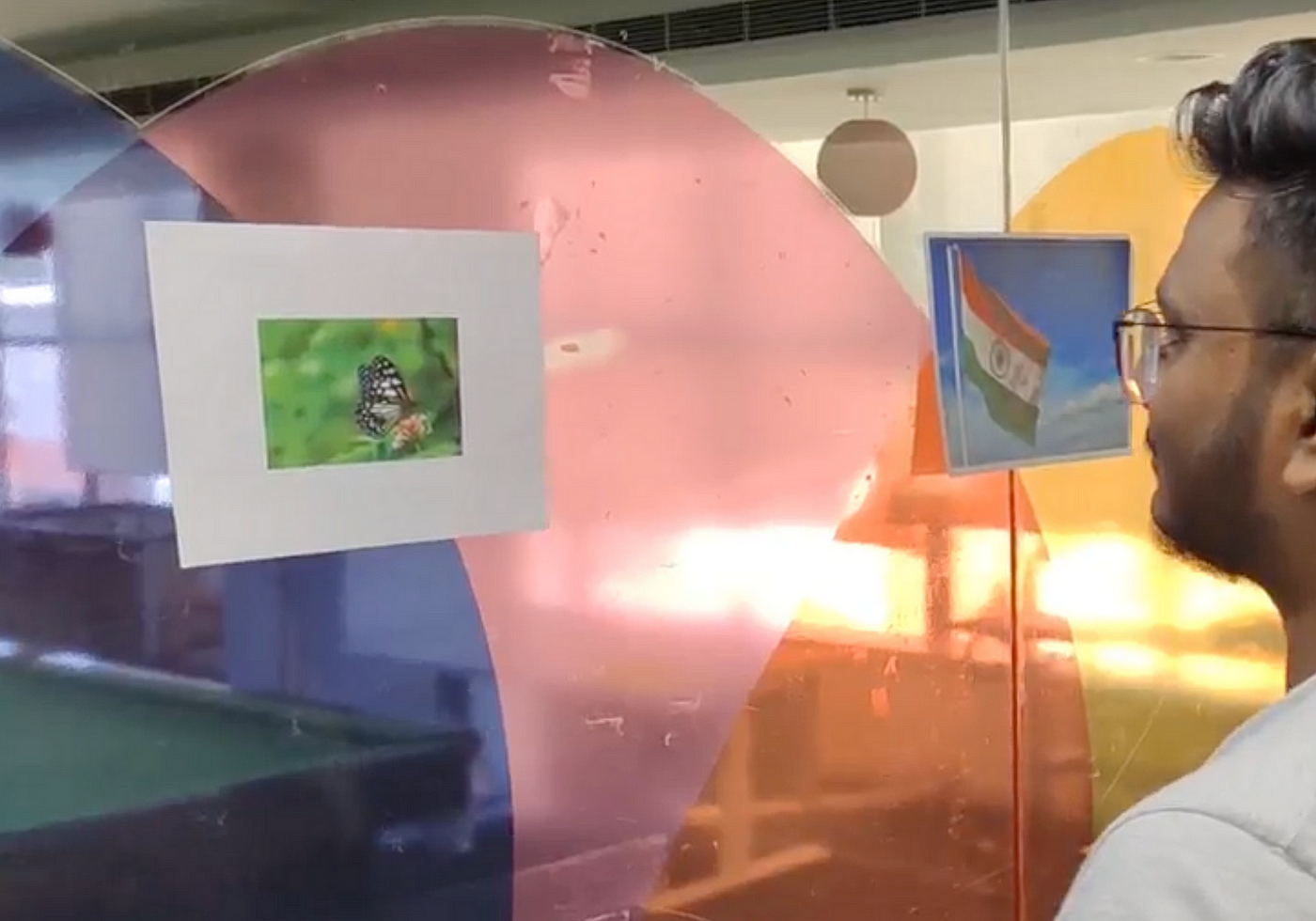

Here is a quick demo.

☑️ Conclusion

Tracking objects and images in the environment is becoming a common feature in upcoming XR devices. ThinkReality XR devices also offer this capability with the Spaces SDK. Numerous use cases can be built on these features, and we have showcased one of them. However, these features require high computing power and device resources. Imagine how these use cases could evolve with the support of 5G/6G internet and edge computing. This has the potential to make the real world more engaging and interconnected, ultimately realizing the Real World Metaverse vision of Niantic and the like.

This article was originally published in the XR Practices Medium publication.
