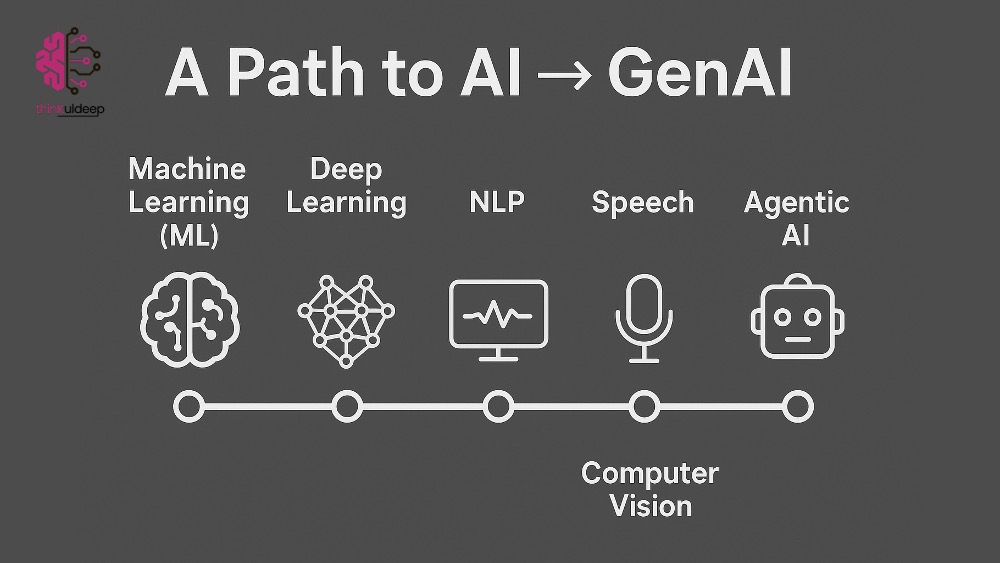

A Path to AI → GenAI → Agentic AI

The world of technology is evolving at lightning speed. Every week, new AI models, frameworks, and services make headlines. With the hype around LLMs, Agentic AI, MCPs, and A2A systems, it’s easy to feel like we’re always playing catch-up.

But here’s the truth: without strong fundamentals, chasing the “next big thing” is like building a skyscraper on sand. A strong foundation ensures stability. That’s why, before diving deep into Generative AI, it’s critical to revisit the path that got us here.

This article is structured as a learning guide — a quick explainer — so you can use it to build the right base for your GenAI journey (and even prepare for AI/GenAI certifications).

We’ll cover the evolutionary path:

Machine Learning → Deep Learning → Generative AI → NLP → Speech → Computer Vision → Agentic AI

1. Machine Learning (ML): The Starting Point

At its core, machine learning (ML) is about teaching machines to learn from experience, much as humans do. Models are essentially mathematical functions whose parameters are fitted to large datasets so that they predict outcomes (labels) from given inputs (features).

The process of building ML models involves training on datasets, adjusting parameters, validating results on test data, and repeating this cycle until the results are satisfactory.

Two primary techniques define ML:

- Supervised learning: where we have labeled data with known outcomes.

- Unsupervised learning: where no labels exist, and the machine must find hidden patterns or groupings.

1.1 Supervised Machine Learning

Supervised learning is built on historical observations in which each set of inputs (the features x1, x2, x3, …) comes with a known outcome (the label, y). Training derives a model, a mathematical function that represents the relationship between them:

y = f(x1, x2, x3, …)

With this, we can predict outcomes for new data.
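A minimal sketch of this idea, assuming a linear form for f and using NumPy's least-squares solver on a small made-up dataset (the data and the `predict` helper are illustrative, not from a real project):

```python
import numpy as np

# Hypothetical historical observations: each row holds the features (x1, x2)
# and y holds the known label. Here the labels follow y = 2*x1 + 3*x2 + 1 exactly.
X = np.array([[1.0, 2.0],
              [2.0, 0.0],
              [3.0, 1.0],
              [4.0, 3.0],
              [5.0, 5.0]])
y = 2 * X[:, 0] + 3 * X[:, 1] + 1

# Append a column of ones so the model can also learn an intercept term.
X_design = np.column_stack([X, np.ones(len(X))])

# Ordinary least squares: the weights that minimize the squared prediction error.
weights, *_ = np.linalg.lstsq(X_design, y, rcond=None)

def predict(features):
    """Apply the learned f(x1, x2) = w1*x1 + w2*x2 + b to new rows of features."""
    features = np.atleast_2d(features)
    return np.column_stack([features, np.ones(len(features))]) @ weights

print(np.round(weights, 3))    # learned [w1, w2, b]
print(predict([[6.0, 2.0]]))   # prediction for an unseen observation
```

Because the toy labels were generated exactly as 2*x1 + 3*x2 + 1, the learned weights come out close to [2, 3, 1]; real data is noisy, so a real fit is only approximate.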

- Regression is used when predicting numeric values (e.g., predicting rainfall based on temperature and wind speed).

- Classification is used when predicting categories (e.g., diagnosing diseases from patient records).

  - Binary classification: two categories (true/false, positive/negative).

  - Multiclass classification: more than two categories.

Key steps in supervised learning include:

- Splitting data into training and test sets.

- Applying algorithms like Linear Regression (for regression) or Logistic Regression (for classification).

- Evaluating results on the test set with metrics such as confusion matrices and F1 score (for classification) or MAE, MSE, and R² (for regression).

- Iterating until results reach an acceptable accuracy.
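The split, fit, and evaluate steps can be sketched in plain NumPy, assuming synthetic regression data and hand-written metric helpers. `confusion_matrix` and `f1_score` here are illustrative reimplementations; libraries such as scikit-learn provide tested versions.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# --- Regression: split, fit on the training rows, evaluate on held-out rows ---
# Synthetic, illustrative data: y depends linearly on x, plus Gaussian noise.
x = rng.uniform(0, 10, size=100)
y = 3.0 * x + 5.0 + rng.normal(0, 1.0, size=100)

# Shuffle the row indices and hold out 20% of the rows as the test set.
idx = rng.permutation(len(x))
train_idx, test_idx = idx[:80], idx[80:]

# Fit a straight line (degree-1 polynomial) using the training rows only.
slope, intercept = np.polyfit(x[train_idx], y[train_idx], deg=1)
y_pred = slope * x[test_idx] + intercept
y_test = y[test_idx]

mae = np.mean(np.abs(y_test - y_pred))
mse = np.mean((y_test - y_pred) ** 2)
r2 = 1 - np.sum((y_test - y_pred) ** 2) / np.sum((y_test - y_test.mean()) ** 2)
print(f"MAE={mae:.2f}  MSE={mse:.2f}  R²={r2:.3f}")

# --- Binary classification: confusion matrix and F1 score ---
def confusion_matrix(y_true, y_pred):
    """Return [[TN, FP], [FN, TP]] for 0/1 labels."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))
    tn = np.sum((y_true == 0) & (y_pred == 0))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    return np.array([[tn, fp], [fn, tp]])

def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall for the positive class."""
    (tn, fp), (fn, tp) = confusion_matrix(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0

# Hypothetical true labels and predictions from some classifier.
labels      = [1, 0, 1, 1, 0, 0, 1, 0]
predictions = [1, 0, 0, 1, 0, 1, 1, 0]
print(confusion_matrix(labels, predictions))
print(f"F1={f1_score(labels, predictions):.3f}")
```

If the test metrics are not good enough, the iterate step means changing features, algorithms, or settings and repeating the cycle, always judging on data the model did not train on.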

1.2 Unsupervised Machine Learning

In contrast, unsupervised learning deals with data that has no labels. The goal is to uncover structure and relationships hidden within the data.

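A common technique is K-Means clustering, which groups data points into k clusters based on similarity. Starting from k initial centroids, the algorithm repeatedly assigns each point to its nearest centroid and then moves each centroid to the mean of its assigned points, until the clusters stabilize.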

Evaluation here measures how compact and well separated the clusters are, using metrics such as the silhouette score or the distances of points from their cluster centers.
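A from-scratch sketch of K-Means and the silhouette score, assuming three synthetic, well-separated 2-D blobs. It uses farthest-point initialization (a simple deterministic alternative to k-means++) so the example behaves the same way on every run; the helper names are illustrative.

```python
import numpy as np

def assign(points, centroids):
    """Index of the nearest centroid for every point."""
    dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

def kmeans(points, k, n_iter=100):
    # Farthest-point initialization: start from the first point, then keep adding
    # the point that is farthest from every centroid chosen so far.
    centroids = [points[0]]
    for _ in range(k - 1):
        nearest = np.min([np.linalg.norm(points - c, axis=1) for c in centroids], axis=0)
        centroids.append(points[nearest.argmax()])
    centroids = np.array(centroids)

    for _ in range(n_iter):
        labels = assign(points, centroids)
        # Move each centroid to the mean of its assigned points (keep it if empty).
        new_centroids = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):  # centroids stopped moving
            break
        centroids = new_centroids
    return assign(points, centroids), centroids

def silhouette_score(points, labels):
    """Mean of (b - a) / max(a, b): a = mean distance to the point's own cluster,
    b = mean distance to the nearest other cluster. Assumes at least 2 clusters."""
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    scores = []
    for i in range(len(points)):
        same = labels == labels[i]
        same[i] = False  # exclude the point itself
        if not same.any():
            scores.append(0.0)  # common convention for single-point clusters
            continue
        a = dists[i, same].mean()
        b = min(dists[i, labels == other].mean()
                for other in np.unique(labels) if other != labels[i])
        scores.append((b - a) / max(a, b))
    return float(np.mean(scores))

# Synthetic data: three blobs of 30 points each around known centers.
rng = np.random.default_rng(42)
centers = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
data = np.vstack([c + rng.normal(0, 0.5, size=(30, 2)) for c in centers])

labels, centroids = kmeans(data, k=3)
print(np.round(centroids, 2))
print(f"silhouette = {silhouette_score(data, labels):.3f}")
```

A silhouette score close to 1 means points sit much closer to their own cluster than to the nearest other one; values near 0 suggest overlapping clusters.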

In many real-world scenarios, hybrid approaches combine supervised and unsupervised methods, allowing clusters to be labeled and then used for prediction tasks.

2. Deep Learning: Inspired by the Brain

Deep learning takes ML a step further with artificial neural networks, loosely inspired by how the human brain processes information.

Each artificial neuron multiplies its inputs by weights (their importance), adds a bias, and passes the sum through an activation function that determines how strongly the signal is passed on to the next layer.
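A single neuron, sketched in NumPy with made-up inputs, weights, and bias, and two common activation functions (sigmoid and ReLU):

```python
import numpy as np

def sigmoid(z):
    """Squashes any value into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Passes positive values through unchanged and outputs 0 for negatives."""
    return np.maximum(0.0, z)

def neuron(inputs, weights, bias, activation=sigmoid):
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    z = np.dot(inputs, weights) + bias
    return activation(z)

x = np.array([0.5, -1.0, 2.0])   # three input signals (illustrative values)
w = np.array([0.4, 0.3, 0.1])    # how much each input matters
b = -0.2

print(neuron(x, w, b))           # sigmoid output
print(neuron(x, w, b, relu))     # ReLU output
```

Here the weighted sum is -0.1, so the sigmoid gives a value just under 0.5 while ReLU outputs 0 and effectively blocks the signal. A layer is simply many such neurons sharing the same inputs.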

Through repeated training (epochs), weights are adjusted using methods like gradient descent until errors are minimized. Networks with many layers are called deep neural networks (DNNs), which can handle complex tasks in regression, classification, natural language, and computer vision.
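To make the training loop concrete, here is a sketch of gradient descent on a single sigmoid neuron learning the logical OR function. It is an illustrative toy (one neuron, not a deep network), with the learning rate and epoch count chosen by hand:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Toy training set: the logical OR of two inputs.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 1], dtype=float)

w = np.zeros(2)
b = 0.0
learning_rate = 1.0

for epoch in range(2000):
    # Forward pass: current predictions for every training example.
    p = sigmoid(X @ w + b)
    # Binary cross-entropy loss, tracked so we can watch it shrink.
    loss = -np.mean(y * np.log(p + 1e-12) + (1 - y) * np.log(1 - p + 1e-12))
    # Gradients of that loss with respect to the weights and bias
    # (for a sigmoid output with cross-entropy, the error term is simply p - y).
    error = p - y
    grad_w = X.T @ error / len(y)
    grad_b = error.mean()
    # Gradient descent step: move the parameters against the gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
    if epoch % 500 == 0:
        print(f"epoch {epoch}: loss {loss:.4f}")

print((sigmoid(X @ w + b) > 0.5).astype(int))
```

A deep network applies the same idea across many layers, with backpropagation computing the gradient for every weight.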

3. Generative AI: The Creative Leap

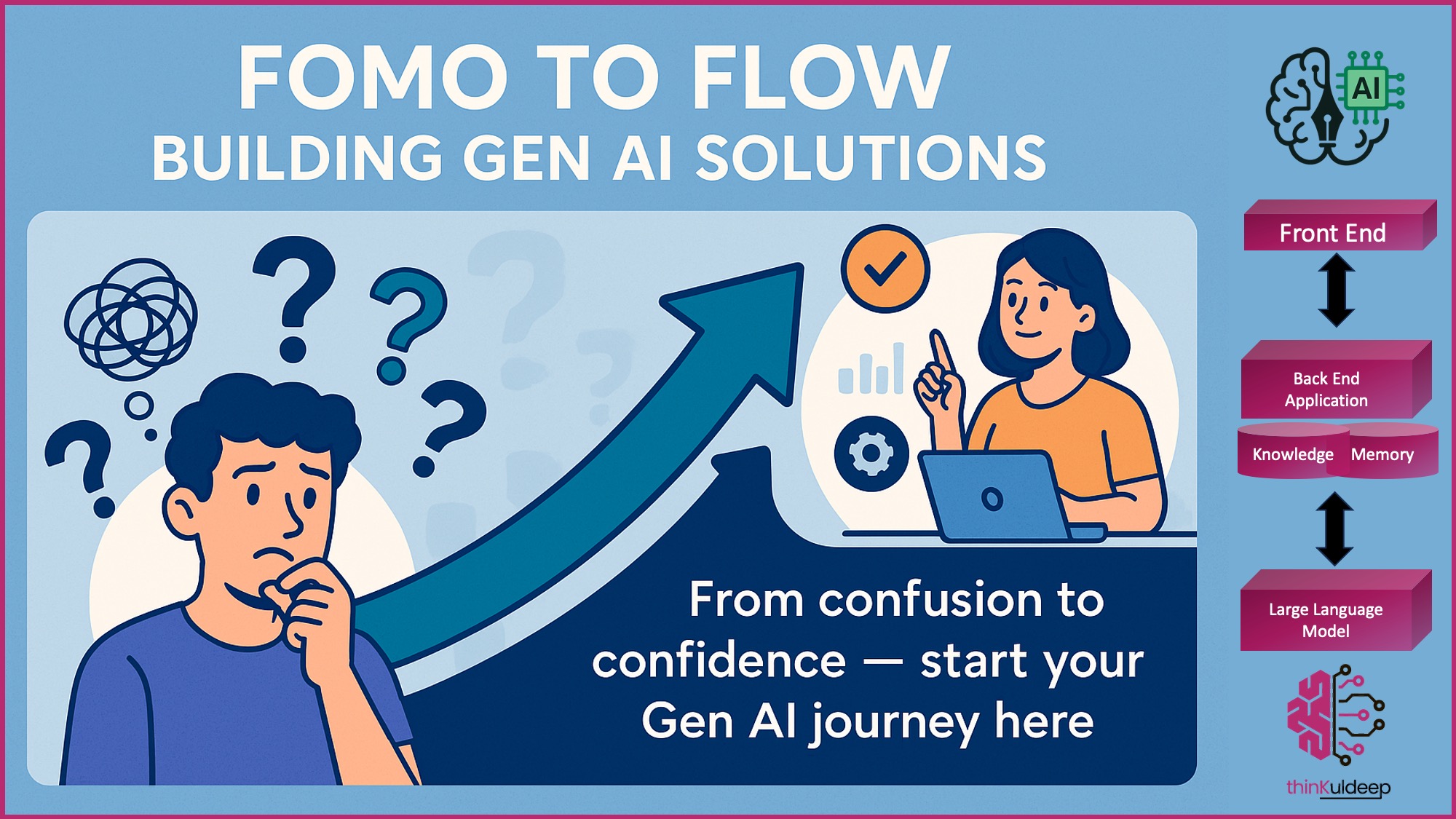

Generative AI (GenAI) represents a leap forward. Unlike traditional ML, it doesn’t just predict — it creates. With natural language prompts, GenAI can produce text, images, code, audio, and more.

We covered the fundamentals of Generative AI in a recent article on Building Gen AI solutions.

The evolution here is tied to natural language processing (NLP):

- Early methods relied on tokenization and embeddings to represent words numerically.

- RNNs allowed sequential predictions but struggled with long contexts.

- Transformers revolutionized NLP by relying on self-attention, which lets each token weigh its relevance to every other token and allows a whole sequence to be processed in parallel.
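A toy sketch of the first step, tokenization followed by an embedding lookup, assuming whitespace tokenization and a randomly initialized embedding table. Real LLMs use subword tokenizers such as BPE or WordPiece, and their embeddings are learned during training.

```python
import numpy as np

corpus = ["the cat sat on the mat", "the dog sat on the rug"]

# Build a vocabulary that maps each distinct word to an integer id.
# (Whitespace splitting is a simplification of real subword tokenizers.)
vocab = {}
for sentence in corpus:
    for word in sentence.split():
        vocab.setdefault(word, len(vocab))

def tokenize(text):
    """Turn text into a list of token ids using the toy vocabulary."""
    return [vocab[word] for word in text.split()]

# Embedding table: one vector per vocabulary entry. It is random here; in a trained
# model these vectors are learned so that related words end up close together.
embedding_dim = 4
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(len(vocab), embedding_dim))

ids = tokenize("the cat sat on the rug")
vectors = embeddings[ids]   # shape: (number of tokens, embedding_dim)
print(ids)
print(vectors.shape)
```

Both occurrences of "the" map to the same id and therefore the same vector; giving each token a context-dependent representation is the job of the later layers.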

The transformer design led to powerful architectures like:

- BERT (encoder-based, by Google) for understanding context.

- GPT (decoder-based, by OpenAI) for generating coherent content.
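One core difference between encoder-style (BERT-like) and decoder-style (GPT-like) models shows up in scaled dot-product self-attention: a decoder applies a causal mask so each token only attends to itself and earlier positions. A single-head NumPy sketch, with random matrices standing in for learned weights:

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v, causal=False):
    """Scaled dot-product self-attention over x of shape (seq_len, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Score of every token (row) against every other token (column).
    scores = q @ k.T / np.sqrt(k.shape[-1])
    if causal:
        # Decoder-style: token i may only look at tokens 0..i.
        mask = np.triu(np.ones_like(scores, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
x = rng.normal(size=(seq_len, d_model))       # stand-in token embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

_, enc_weights = self_attention(x, w_q, w_k, w_v)               # encoder-style
_, dec_weights = self_attention(x, w_q, w_k, w_v, causal=True)  # decoder-style
print(np.round(enc_weights, 2))   # every token attends to every position
print(np.round(dec_weights, 2))   # upper triangle is zero: no looking ahead
```

Seeing the whole sentence in both directions suits understanding tasks, while the causal mask is what lets a decoder generate text one token at a time.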

Today’s LLMs are built on these foundations. GenAI advances have also had a strong impact on the traditional AI areas of natural language processing, audio processing, and computer vision.

4. Natural Language Processing (NLP)

Core NLP tasks include:

- Language detection

- Sentiment analysis

- Named entity recognition

- Text classification

- Translation

- Summarization

- Conversational AI

Many cloud providers now bundle most of these as ready-to-use services, often backed by LLMs, but understanding the underlying mechanics helps us use and evaluate them better.

5. Speech Processing

Modern speech systems convert speech to text and text to speech in real time. Thanks to deep neural networks, today’s synthetic voices sound natural — closing the gap between human and machine communication.

These capabilities power applications in accessibility, customer support, and live transcription.

6. Computer Vision

Computer vision enables machines to interpret visual information. Traditionally, convolutional neural networks (CNNs) dominated this field, excelling at tasks like image classification, object detection, and segmentation.
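The operation at the heart of a CNN layer is sliding a small kernel over the image and summing element-wise products. A minimal NumPy sketch with a hand-written vertical-edge kernel on a toy 5x5 image (in a trained CNN the kernel values are learned, not hand-picked):

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (technically cross-correlation,
    since the kernel is not flipped): slide the kernel and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A tiny 5x5 grayscale image: dark on the left, bright on the right.
image = np.array([[0, 0, 0, 1, 1]] * 5, dtype=float)

# A vertical-edge detector: responds where brightness increases from left to right.
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float)

print(conv2d(image, kernel))
```

The non-zero columns in the output mark where the kernel window straddles the boundary between the dark and bright regions; stacking many learned kernels and layers lets a CNN build up from edges to shapes to objects.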

But now, transformers and multimodal models extend these abilities further. By combining image encoders with text embeddings, models can understand and generate across modalities — describing images in natural language or generating images from text.
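Matching across modalities usually comes down to comparing vectors in a shared embedding space. A sketch with small hand-picked vectors standing in for the outputs of an image encoder and a text encoder (the numbers are hypothetical, not from a real model):

```python
import numpy as np

def cosine_similarity(a, b):
    """1.0 means the vectors point the same way; values near 0 mean unrelated."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical embeddings. In a CLIP-style model, an image encoder and a text encoder
# are trained so that matching images and captions land close together.
image_embedding = np.array([0.9, 0.1, 0.3])
caption_embeddings = {
    "a photo of a cat": np.array([0.8, 0.2, 0.4]),
    "a photo of a car": np.array([0.1, 0.9, 0.2]),
    "a photo of a tree": np.array([0.2, 0.3, 0.9]),
}

# Score every caption against the image and pick the closest one.
scores = {text: cosine_similarity(image_embedding, emb)
          for text, emb in caption_embeddings.items()}
best = max(scores, key=scores.get)
print(best)
```

The same similarity works in the other direction, for example ranking images against a text query.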

7. Agentic AI: The Next Stage

Agentic AI systems not only process information but also take autonomous actions, chaining multiple models and tools together.

- Example: AI agents booking a flight, writing an itinerary, and syncing your calendar — without manual intervention.

- Built on strong ML, DL, and GenAI foundations.
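The agent loop can be sketched as observe, decide, act, repeat. In this toy version the tools (`book_flight`, `write_itinerary`, `Calendar`) are hypothetical stubs, and `plan_next_action` is hard-coded where a real agent would ask an LLM to choose the next step:

```python
from dataclasses import dataclass, field

# --- Hypothetical tools: stand-ins for real travel and calendar APIs ---
def book_flight(origin, destination, date):
    return {"route": f"{origin}->{destination}", "date": date, "confirmation": "ABC123"}

def write_itinerary(booking):
    return f"Fly {booking['route']} on {booking['date']} (confirmation {booking['confirmation']})"

@dataclass
class Calendar:
    events: list = field(default_factory=list)

    def add_event(self, date, title):
        self.events.append((date, title))
        return len(self.events)

# --- Planner: a real agent would ask an LLM; here the decision logic is hard-coded ---
def plan_next_action(state):
    if "booking" not in state:
        return "book_flight"
    if "itinerary" not in state:
        return "write_itinerary"
    if "calendar_event" not in state:
        return "sync_calendar"
    return "done"

def run_agent(goal, calendar, max_steps=10):
    """Observe the state, pick an action, call a tool, record the result, repeat."""
    state = {}
    for _ in range(max_steps):  # a step limit guards against endless loops
        action = plan_next_action(state)
        if action == "done":
            break
        if action == "book_flight":
            state["booking"] = book_flight(goal["from"], goal["to"], goal["date"])
        elif action == "write_itinerary":
            # Each tool's output feeds the next step: this is the "chaining".
            state["itinerary"] = write_itinerary(state["booking"])
        elif action == "sync_calendar":
            state["calendar_event"] = calendar.add_event(goal["date"], state["itinerary"])
        print("step:", action)
    return state

calendar = Calendar()
state = run_agent({"from": "NYC", "to": "SFO", "date": "2025-05-01"}, calendar)
print(calendar.events)
```

The step limit and the explicit state dictionary are the kind of guardrails that matter once a model, rather than fixed rules, is choosing the actions.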

Conclusion

Generative AI is redefining how we interact with technology, but the path here matters. Without grounding in ML, DL, NLP, and CV, it’s easy to misuse or overestimate GenAI.

Think of this journey as layers of a course:

- ML (prediction)

- DL (complex data handling)

- GenAI (creation)

- NLP, Speech, CV (specializations)

- Agentic AI (autonomy)

✨ Certification Tip: Most GenAI certifications (from Google, Microsoft, AWS, or Stanford/DeepLearning.AI) expect you to understand this progression, not just how to call an API.

By stepping back, reinforcing fundamentals, and then advancing, we ensure that our GenAI journey isn’t just hype-driven — but future-ready.

All the best — thinkuldeep.com 🙌

A variation of this article was originally published in the AI Practices Publication.

#evolution #ai #genai #technology #prompt #tutorial #learnings #fine-tuning #development #embeddings #agenticai #future #practice